The ability to resend messages easily has always been a strong feature of our Interoperability capabilities.

With v2024.3 coming out soon (and already available as a Developer Preview), we've made this even easier!

You can now resend directly from the Visual Trace. A Resend button sits next to the Message Header, and clicking it takes you to the usual Resend Message Editor. You no longer need to go through the Message Viewer and search for your specific message.

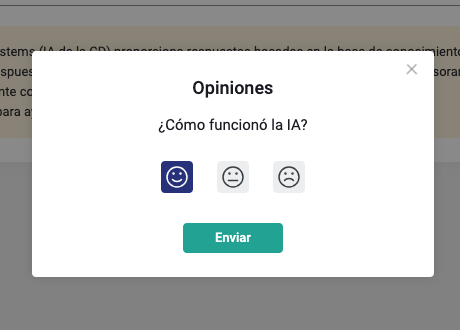

Here's an example of what this looks like:


See also the related Release Notes.